In many current systems, 3D player avatars can only be controlled through very basic means, such as a small set of pre-recorded motions or a few facial expressions. To create more immersive experiences in virtual worlds, virtual characters must be able to express their emotions (such as happiness) and physical state (such as tiredness) much more convincingly. Achieving this requires not only that these aspects are visualized realistically, but also that users can control their avatars in an easy and natural way.

Project lead: Dr.Ir. Arjan Egges, formerly of Utrecht University

Within this work package, an integrated framework is being developed in which motion and emotional expressions are combined into a generic approach for affective character animation. This includes the development of new algorithms that automatically compute synchronized facial and body motions expressing a variety of emotions and physical states, with a focus on stronger expressions such as laughing, crying, shouting and heavy breathing.

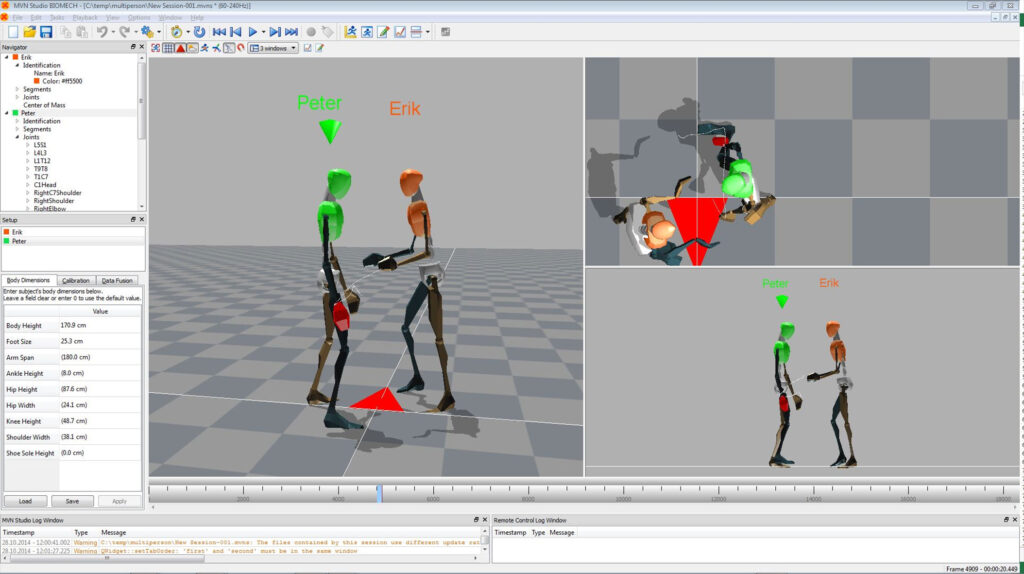

Another development is a mechanism that lets users steer the animation of their avatars through a simple interface, such as a few sensors placed on the user’s arms and legs. The sensor signals will drive an animation engine that translates them into similar avatar motions.
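
To make the idea of translating a few sensor signals into avatar motion more concrete, here is a minimal sketch in Python. It is not the project's actual method. It assumes four hypothetical sensor values (left arm, right arm, left leg, right leg, each normalized to the range 0 to 1) and a small, made-up database of stored poses, and it simply selects the stored pose whose sensor signature is closest to the current reading.

```python
import math

# Hypothetical pose database: each entry pairs an illustrative sensor
# signature (left arm, right arm, left leg, right leg) with a pose name.
# The names and values are assumptions made up for this sketch.
POSE_DATABASE = [
    {"name": "idle", "sensors": [0.0, 0.0, 0.0, 0.0]},
    {"name": "wave", "sensors": [0.9, 0.0, 0.0, 0.0]},
    {"name": "jump", "sensors": [0.5, 0.5, 0.8, 0.8]},
]


def nearest_pose(reading):
    """Return the name of the stored pose closest to the sensor reading.

    Closeness is measured by Euclidean distance between the reading and
    each stored sensor signature (a simple nearest-neighbor lookup).
    """
    best = min(POSE_DATABASE, key=lambda p: math.dist(p["sensors"], reading))
    return best["name"]


if __name__ == "__main__":
    # A reading with the left arm raised selects the "wave" pose.
    print(nearest_pose([0.8, 0.1, 0.0, 0.0]))
```

A real animation engine would need to blend between poses over time and handle sensor noise; the lookup above only illustrates the basic mapping from a sparse set of signals to a full-body motion.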