In depth: our RAGE project

A conversation with Zerrin Yumak, assistant professor in the Interaction Technology division of the Department of Information and Computing Sciences, Faculty of Science, Utrecht University.

Who are we and what is our role in the RAGE project?

I am Zerrin Yumak, assistant professor of computer science at Utrecht University, and I lead Task 3.2: Embodiment and Physical Interaction in the RAGE project. The task develops assets that make it easy to create flexible embodiments of virtual characters. We provide virtual character assets in which motions are adapted by specifying constraints. Constraints affect the joint orientations of an underlying skeleton, as well as other aspects of the character. They can come from the environment, from the proportions of the character, and also from the emotional and social context. Enforcing motion constraints requires dealing with non-linearities in the rotational domain and with restrictions on the degrees of freedom of the joints. We view motion on a semantic level: we specify the meaning of a motion, which is then translated into the motion of the particular character that performs it. Based on studies of how well emotions displayed by virtual characters are perceived, we support more efficient production of emotional animations.
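To make the two difficulties concrete, here is a minimal sketch; it is not code from the RAGE asset. It assumes joint rotations are stored as unit quaternions `(w, x, y, z)` and models a joint with restricted degrees of freedom as a hypothetical single-axis hinge (an elbow, say) with angle limits. `slerp` blends two rotations along the sphere of rotations, which is where the non-linearity shows up, and `clamp_hinge` keeps only the rotation about the hinge axis and clamps its angle. All function names are illustrative.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length
Vec3 = Tuple[float, float, float]         # assumed unit length when used as an axis


def normalize(q: Quat) -> Quat:
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)


def from_axis_angle(axis: Vec3, angle: float) -> Quat:
    """Unit quaternion for a rotation of `angle` radians about `axis`."""
    s = math.sin(angle / 2.0)
    return (math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation: constant angular speed between two rotations."""
    d = sum(a * b for a, b in zip(q0, q1))
    if d < 0.0:
        # q and -q encode the same rotation; flip to interpolate along the shorter arc.
        q1 = tuple(-c for c in q1)
        d = -d
    if d > 0.9995:
        # Nearly identical rotations: normalized linear interpolation avoids dividing by ~0.
        return normalize(tuple(a + t * (b - a) for a, b in zip(q0, q1)))
    theta = math.acos(d)
    s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))


def clamp_hinge(q: Quat, axis: Vec3, lo: float, hi: float) -> Quat:
    """Project q onto a 1-DOF hinge about `axis` (dropping swing) and clamp the angle to [lo, hi]."""
    w, x, y, z = q
    proj = x * axis[0] + y * axis[1] + z * axis[2]
    if w < 0.0:
        # Choose the representative with w >= 0 so the twist angle lies in [-pi, pi].
        w, proj = -w, -proj
    angle = 2.0 * math.atan2(proj, w)
    return from_axis_angle(axis, min(max(angle, lo), hi))


if __name__ == "__main__":
    elbow = (1.0, 0.0, 0.0)  # hypothetical hinge axis
    target = from_axis_angle(elbow, math.radians(170))
    limited = clamp_hinge(target, elbow, 0.0, math.radians(150))
    print(math.degrees(2 * math.acos(limited[0])))  # about 150
```

Interpolating Euler angles component by component would not follow the shortest rotation, which is why the blend is done on quaternions; the hinge clamp then enforces the joint's reduced degrees of freedom after blending.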

What is special about our research?

The believability of virtual human motion is crucial for engaging people and creating an illusion of reality. However, believable characters are not easy to create. Motion capture is an effective way to obtain realistic motion. Our research is about analysing social and emotional behaviours and turning them into expressive character animations.

To do this, we first analyse how people behave in real contexts. For example, we capture and analyse how people behave during group social interactions, how often they look at or away from the other person, and what kind of non-verbal behaviours they display. We also look into their attitudes and roles in the conversation. By learning patterns from the data with machine learning algorithms, we gain insights into real-life behaviour. We then use these insights and the motion capture data to generate animations. Starting from the captured motions, we generate new animations by modifying and combining the existing ones. This requires motion signal processing and constrained motion graph search algorithms.
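The interview does not describe the exact search the team uses, so the following is only a minimal sketch of the idea of a constrained motion graph search. It assumes captured clips are graph nodes, allowed transitions are weighted edges (the weight standing in for how visible the blend would be), and constraints are predicates on clips. It uses uniform-cost (Dijkstra) search; full motion graphs typically connect individual frames rather than whole clips. The clip names, tags and costs are hypothetical.

```python
import heapq
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Clip:
    name: str
    tags: frozenset  # hypothetical semantic labels attached to a captured clip


# Clip name -> list of (next clip name, transition cost).
Graph = Dict[str, List[Tuple[str, float]]]


def search(graph: Graph, clips: Dict[str, Clip], start: str,
           is_goal: Callable[[Clip], bool],
           allowed: Callable[[Clip], bool]) -> Optional[List[str]]:
    """Cheapest clip sequence from `start` to a goal clip, visiting only allowed clips."""
    frontier = [(0.0, start, [start])]
    best = {start: 0.0}
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if cost > best.get(node, math.inf):
            continue  # stale queue entry; a cheaper path to this clip was already found
        if is_goal(clips[node]):
            return path
        for nxt, weight in graph.get(node, []):
            if not allowed(clips[nxt]):
                continue  # constraint: never transition into a disallowed clip
            new_cost = cost + weight
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                heapq.heappush(frontier, (new_cost, nxt, path + [nxt]))
    return None  # no sequence satisfies the constraints


EXAMPLE_CLIPS = {
    "idle": Clip("idle", frozenset({"neutral"})),
    "look_away": Clip("look_away", frozenset({"gaze_away"})),
    "nod": Clip("nod", frozenset({"gaze_at", "nod"})),
    "arms_crossed": Clip("arms_crossed", frozenset({"conflictive"})),
    "beat_gesture": Clip("beat_gesture", frozenset({"gesture", "gaze_at"})),
}
EXAMPLE_GRAPH: Graph = {
    "idle": [("look_away", 1.0), ("arms_crossed", 0.5), ("nod", 2.0)],
    "look_away": [("beat_gesture", 1.0)],
    "arms_crossed": [("beat_gesture", 0.5)],
    "nod": [("beat_gesture", 0.5)],
}

if __name__ == "__main__":
    friendly = search(EXAMPLE_GRAPH, EXAMPLE_CLIPS, "idle",
                      is_goal=lambda c: "gesture" in c.tags,
                      allowed=lambda c: "conflictive" not in c.tags)
    print(friendly)
```

With a "friendly" constraint that forbids conflictive clips, the search avoids the cheaper route through `arms_crossed` and reaches the gesture via `look_away` instead; dropping the constraint lets it take the cheaper route.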

What have we done so far?

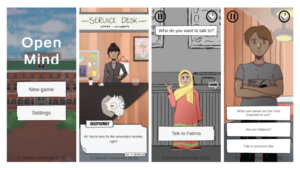

We developed the Virtual Human Controller asset, a collection of modules for creating virtual characters that can talk and perform facial expressions and gestures. The asset provides a quick pipeline for building conversational 3D non-player characters from scratch. It is built on top of the Unity 3D game engine (a popular tool for building games) and provides three functionalities: (1) creating an animatable 3D avatar, (2) individual animation controllers (speech, gaze, gestures), and (3) multimodal animation generation using the Behaviour Markup Language (BML). We successfully integrated our asset with the Communicate! dialogue manager. Beyond this inter-asset integration, the asset is currently being used by game developers at BiP Media in Paris and in an interview skills training game for Randstad.

What is next?

Currently, we are working on three research projects. The first generates lip-sync animation from real human audio by analysing linguistic cues and emotional speech. The second aims to generate believable gesture motion for non-player characters based on conversational attitudes, such as friendly and conflictive styles, and drives the behaviour from the pitch and amplitude of the audio. Last but not least, we are working on automated social behaviour in casual group conversations by analysing a motion capture database of real human interactions. We look at how people take turns, give the floor and interrupt each other, and we use these insights to generate synchronised gaze and gesture behaviour.
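As a rough illustration of driving gesture timing from audio, the sketch below handles amplitude only; pitch tracking is left out. It assumes mono audio samples as floats, computes a frame-wise RMS energy envelope, and treats local energy peaks above a threshold as candidate times for gesture strokes. This is a simplified assumption for illustration, not the project's method, and the function names and threshold are hypothetical.

```python
import math
from typing import List, Sequence


def frame_rms(samples: Sequence[float], frame_len: int, hop: int) -> List[float]:
    """Root-mean-square amplitude of each full frame of `frame_len` samples, stepping by `hop`."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return energies


def stroke_candidates(energies: Sequence[float], threshold: float) -> List[int]:
    """Frame indices that are local energy peaks at or above `threshold` (first frame of a plateau wins)."""
    peaks = []
    for i in range(1, len(energies) - 1):
        e = energies[i]
        if e >= threshold and e > energies[i - 1] and e >= energies[i + 1]:
            peaks.append(i)
    return peaks


def frame_to_seconds(index: int, hop: int, sample_rate: int) -> float:
    """Start time of frame `index` in seconds."""
    return index * hop / sample_rate


if __name__ == "__main__":
    rate = 100  # toy sample rate so the example stays small
    audio = [0.0] * 100
    audio[20:30] = [1.0] * 10  # loud syllable
    audio[60:70] = [0.5] * 10  # quieter syllable
    env = frame_rms(audio, frame_len=10, hop=10)
    times = [frame_to_seconds(i, hop=10, sample_rate=rate) for i in stroke_candidates(env, 0.3)]
    print(times)
```

A character controller could then align the stroke phase of a beat gesture with each candidate time, and an attitude such as "conflictive" might lower the threshold to produce more frequent, emphatic strokes; that mapping is likewise an assumption.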